前提

画像データを用いた機械学習を実装中にエラーが発生しました.

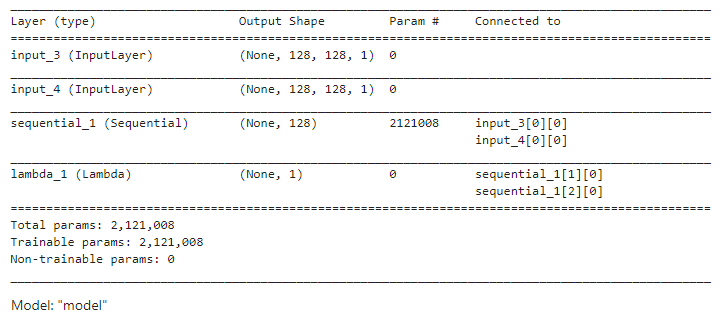

用いたネットワークはCNN,loss関数にはcontrastive loss関数を用いた,Siamese Networkという距離学習手法を実装しています.

発生している問題・エラーメッセージ

--------------------------------------------------------------------------- ResourceExhaustedError Traceback (most recent call last) <ipython-input-37-5dda512c50ab> in <module> 15 steps_per_epoch = 10, 16 validation_steps = None, ---> 17 validation_data=([te_pairs[:, 0], te_pairs[:, 1]], te_y)) 18 /usr/local/lib/python3.5/dist-packages/tensorflow/python/keras/engine/training.py in fit(self, x, y, batch_size, epochs, verbose, callbacks, validation_split, validation_data, shuffle, class_weight, sample_weight, initial_epoch, steps_per_epoch, validation_steps, max_queue_size, workers, use_multiprocessing, **kwargs) 1637 initial_epoch=initial_epoch, 1638 steps_per_epoch=steps_per_epoch, -> 1639 validation_steps=validation_steps) 1640 1641 def evaluate(self, /usr/local/lib/python3.5/dist-packages/tensorflow/python/keras/engine/training_arrays.py in fit_loop(model, inputs, targets, sample_weights, batch_size, epochs, verbose, callbacks, val_inputs, val_targets, val_sample_weights, shuffle, initial_epoch, steps_per_epoch, validation_steps) 152 callbacks.on_batch_begin(step_index, batch_logs) 153 try: --> 154 outs = f(ins) 155 except errors.OutOfRangeError: 156 logging.warning('Your dataset iterator ran out of data; ' /usr/local/lib/python3.5/dist-packages/tensorflow/python/keras/backend.py in __call__(self, inputs) 2984 2985 fetched = self._callable_fn(*array_vals, -> 2986 run_metadata=self.run_metadata) 2987 self._call_fetch_callbacks(fetched[-len(self._fetches):]) 2988 return fetched[:len(self.outputs)] /usr/local/lib/python3.5/dist-packages/tensorflow/python/client/session.py in __call__(self, *args, **kwargs) 1437 ret = tf_session.TF_SessionRunCallable( 1438 self._session._session, self._handle, args, status, -> 1439 run_metadata_ptr) 1440 if run_metadata: 1441 proto_data = tf_session.TF_GetBuffer(run_metadata_ptr) /usr/local/lib/python3.5/dist-packages/tensorflow/python/framework/errors_impl.py in __exit__(self, type_arg, value_arg, traceback_arg) 526 None, None, 527 compat.as_text(c_api.TF_Message(self.status.status)), --> 528 c_api.TF_GetCode(self.status.status)) 529 # Delete the underlying status object from memory otherwise it stays alive 530 # as there is a reference to status from this from the traceback due to ResourceExhaustedError: OOM when allocating tensor with shape[1392,64,128,128] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc [[{{node sequential/conv2d/Conv2D}} = Conv2D[T=DT_FLOAT, _class=["loc:@train...propFilter"], data_format="NCHW", dilations=[1, 1, 1, 1], padding="SAME", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true, _device="/job:localhost/replica:0/task:0/device:GPU:0"](training_11/RMSprop/gradients/sequential/conv2d/Conv2D_grad/Conv2DBackpropFilter-0-TransposeNHWCToNCHW-LayoutOptimizer, sequential/conv2d/Conv2D/ReadVariableOp)]] Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info. [[{{node loss_11/add_2/_553}} = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device="/job:localhost/replica:0/task:0/device:GPU:0", send_device_incarnation=1, tensor_name="edge_926_loss_11/add_2", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]] Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.

該当のソースコード

入力画像は128×128の画像です.

各データのshapeはコメントアウトで記載しております.

python

1tr_y = tf.cast(tr_y, dtype='float32') # Tensor("Cast:0", shape=(1392,), dtype=float32) 2te_y = tf.cast(te_y, dtype='float32') # Tensor("Cast_1:0", shape=(124,), dtype=float32) 3 4rms = RMSprop() 5model.compile(loss=contrastive_loss, optimizer=rms, metrics=[accuracy]) 6 7H = model.fit([tr_pairs[:, 0], tr_pairs[:, 1]], tr_y, # tr_pairs[:, 0].shape = (1392, 128, 128, 1), 8 # tr_pairs[:, 1].shape = (1392, 128, 128, 1) 9 10 epochs=20, 11 verbose=1, 12 steps_per_epoch = 10, 13 validation_steps = None, 14 validation_data=([te_pairs[:, 0], te_pairs[:, 1]], te_y)) # te_pairs[:, 0].shape = (124, 128, 128, 1), 15 # te_pairs[:, 1].shape = (124, 128, 128, 1)

試したこと

おそらくメモリ不足によるエラーだと思い,jupyter labのカーネルを初期化してから実行してみたり,入力する画像数の削減などを試しましたが同じエラーが吐かれます.

7952MiBのGPUサーバを使用しているので,このようにメモリ不足になるのが少し不思議です.

他に原因があるのかと思い質問させていただきました.

有識者の方,何か対処の仕方が分かりましたら教えていただきたいです.

補足情報(FW/ツールのバージョンなど)

サーバPCのスペック

OS : Ubuntu 16.04.5 LTS

CPU : Intel(R) Xeon(R) W-2123 CPU @ 3.60GHz

RAM : 32GiB

グラフィックカード : GeForce RTX 2080 x2

その他バージョン

Python : 3.5.2

TensorFlow : 1.13.0-rc0

Keras : 2.3.1